I have followed AI development long enough to see one pattern repeat. Each generation of models is judged by short-term capability, while its long-term impact unfolds more quietly across institutions, labor, and culture. Asking how AI models may evolve over the next decade is less about predicting a single breakthrough and more about understanding structural direction.

The central question is simple. What will AI models become as they move beyond text generation and into decision support, coordination, and autonomy? The answer is not a sudden leap to artificial general intelligence, but a steady expansion of scope, responsibility, and integration.

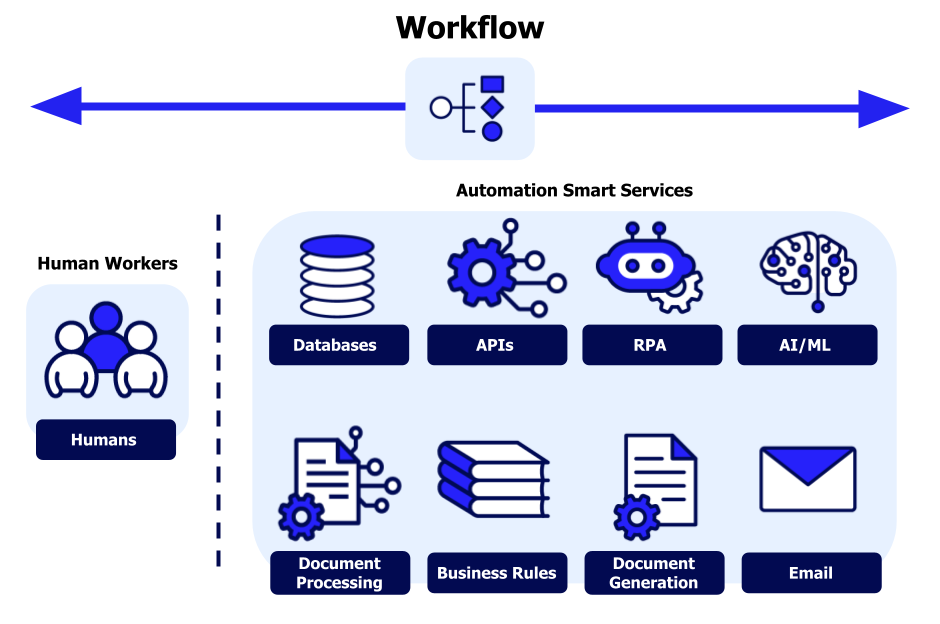

Over the next ten years, AI models will likely shift from tools that respond to prompts into systems that participate in workflows, anticipate needs, and operate under defined constraints. I have observed early versions of this transition in enterprise deployments where models already manage scheduling, triage information, and recommend actions with limited supervision.

This evolution matters because it changes who holds responsibility. As models become more capable, societies will demand stronger governance, clearer accountability, and better alignment with human values. The technical story cannot be separated from the economic and cultural one.

This article explores how AI models may evolve over the next decade by examining capability growth, architectural change, governance pressures, and the human systems that will adapt around them.

From Prediction Engines to Contextual Reasoners

I remember when AI models were described primarily as pattern matchers. That framing is no longer sufficient. Over the next decade, models will increasingly function as contextual reasoners, capable of maintaining longer horizons of understanding across time and tasks.

Technically, this shift is driven by better memory mechanisms, retrieval systems, and multimodal context integration. Socially, it is driven by demand. Organizations want systems that understand situations, not just sentences.

Contextual reasoning does not imply human-like understanding. It implies improved situational awareness within bounded domains. In practice, this means models that remember prior decisions, adapt to user preferences, and incorporate environmental signals.

A researcher at DeepMind noted in 2024 that “progress in AI reasoning comes less from raw scale and more from how models organize information.” That insight frames the next decade well.

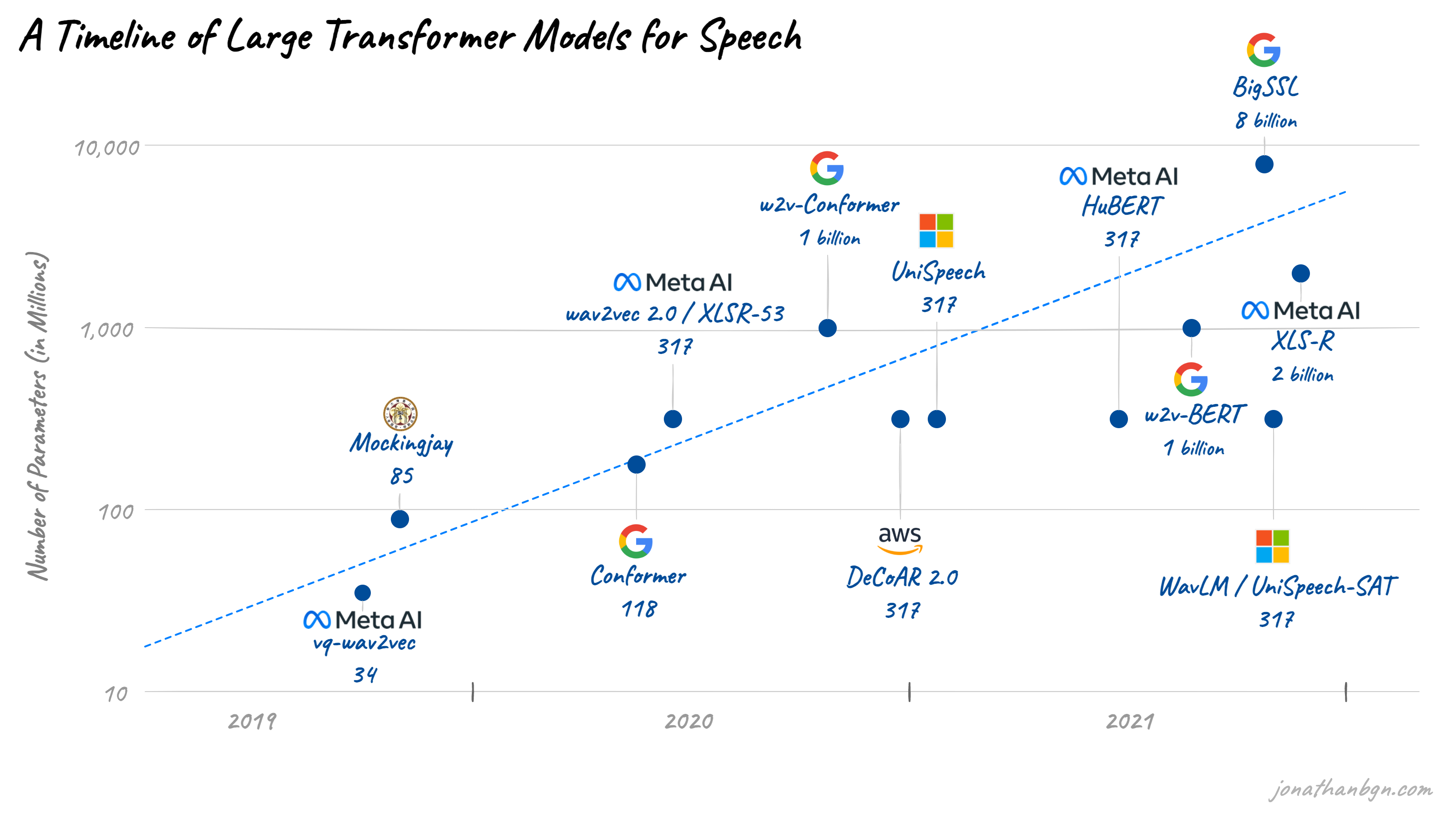

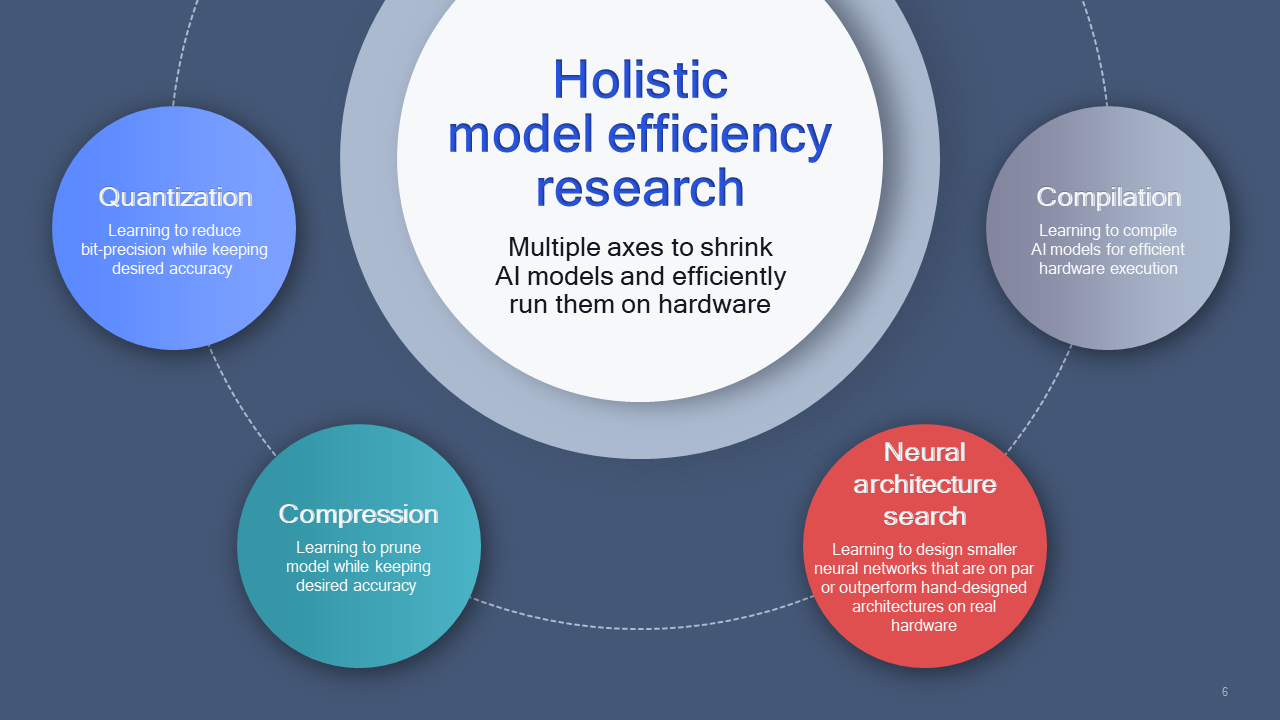

Scaling Will Slow, Systems Thinking Will Accelerate

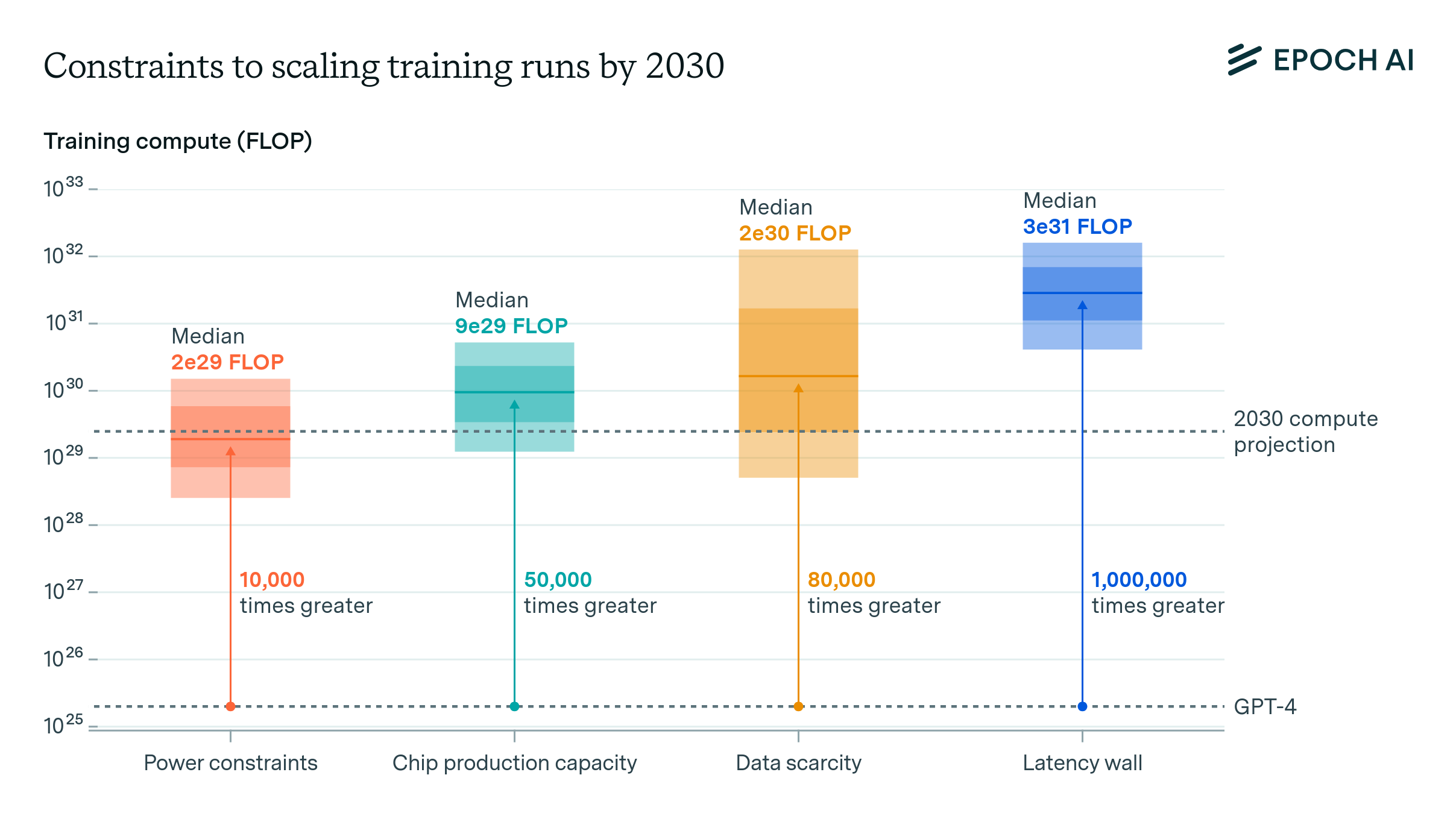

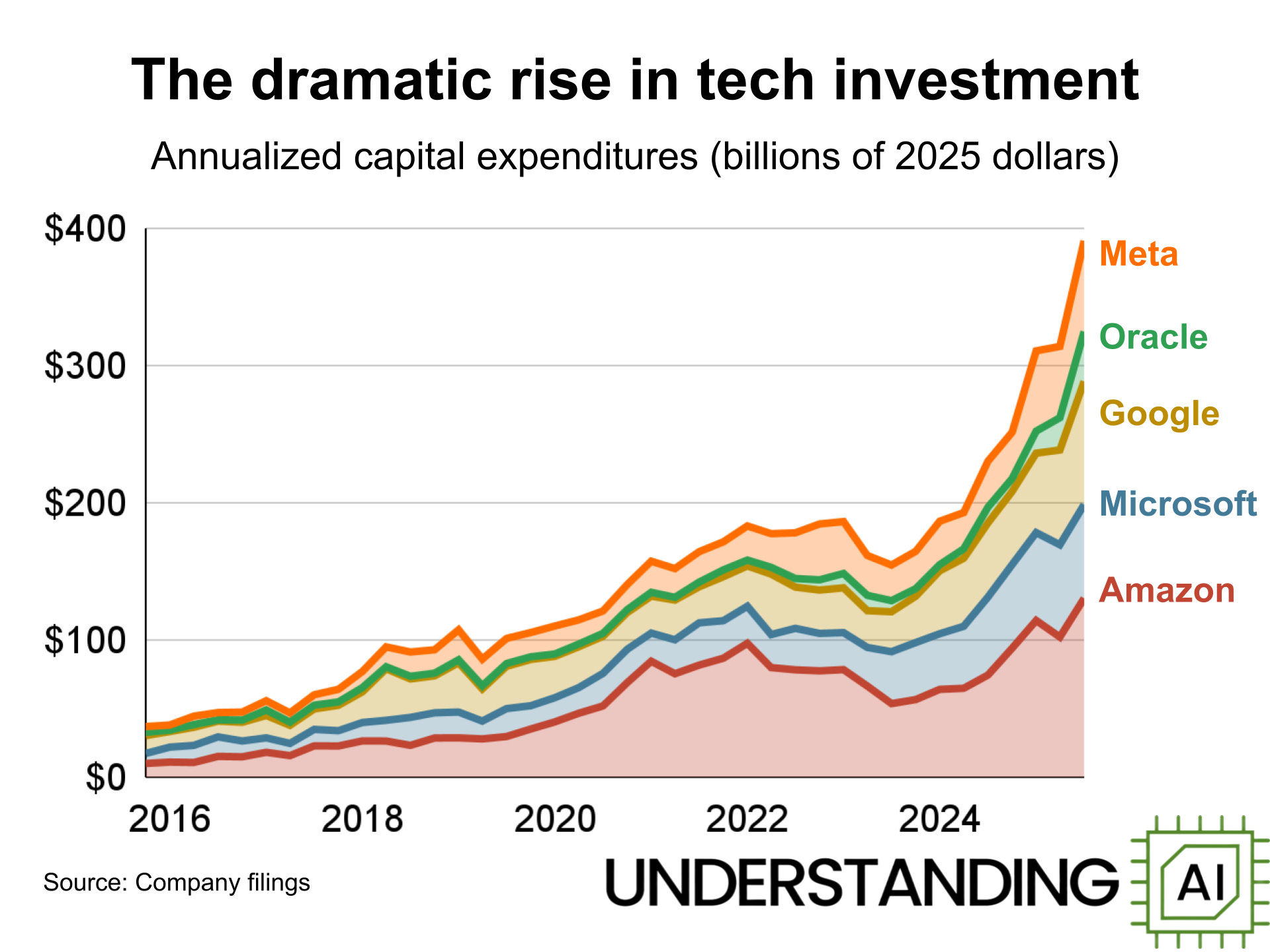

I have sat in discussions where leaders quietly acknowledged what public roadmaps rarely emphasize: scaling cannot continue indefinitely, because it is economically and environmentally constrained. Over the next decade, AI progress will rely less on brute-force parameter growth and more on system-level efficiency.

This does not mean models will stop improving. It means improvement will come from better architectures, specialization, and orchestration. Smaller models working together will replace monolithic systems in many applications.
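The idea of smaller models working together can be illustrated with a minimal routing sketch. Everything here is hypothetical: `summarize` and `extract_dates` are plain functions standing in for specialized models, and `Orchestrator` is an illustrative name, not an API from any real framework.

```python
import re
from dataclasses import dataclass
from typing import Callable, Dict


# Hypothetical specialists: simple functions standing in for small models.
def summarize(text: str) -> str:
    """Return the first sentence as a crude 'summary'."""
    return text.split(".")[0].strip() + "."


def extract_dates(text: str) -> str:
    """Return ISO-style dates (YYYY-MM-DD) found in the text."""
    dates = re.findall(r"\b\d{4}-\d{2}-\d{2}\b", text)
    return ", ".join(dates) or "no dates found"


@dataclass
class Orchestrator:
    """Routes each task to a registered specialist, else to a fallback."""
    specialists: Dict[str, Callable[[str], str]]
    fallback: Callable[[str], str]

    def route(self, task: str, payload: str) -> str:
        handler = self.specialists.get(task, self.fallback)
        return handler(payload)


orchestrator = Orchestrator(
    specialists={"summarize": summarize, "dates": extract_dates},
    fallback=lambda text: "unsupported task",
)

print(orchestrator.route("dates", "Due 2026-03-01 and 2026-04-15."))
print(orchestrator.route("summarize", "First point. Second point."))
```

In a real deployment the routing decision would probably be learned or rule-driven rather than a dictionary lookup, but the structure is the same: a thin coordinator in front of narrow components.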

Energy costs, chip supply chains, and regulatory scrutiny will shape design choices. Efficiency becomes a competitive advantage rather than a technical afterthought.

A 2025 report from the International Energy Agency highlighted rising energy demands from data centers. That pressure will push AI developers toward optimization rather than expansion.

AI Models as Decision Support Infrastructure

One of the most consequential shifts I anticipate is the normalization of AI as decision support infrastructure. Models will increasingly sit inside systems that guide choices rather than generate content.

In healthcare, finance, logistics, and governance, AI models will filter options, flag risks, and simulate outcomes. Humans remain accountable, but AI shapes the decision space.

I have observed this already in policy analysis tools where models summarize scenarios and consequences faster than any team could manually. The model does not decide. It frames.
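A small sketch can make the "frames, does not decide" distinction concrete. The `Option` fields, the scores, and the `risk_limit` parameter are assumptions chosen for illustration; the point is only that the function ranks and annotates every option and never selects or discards one.

```python
from dataclasses import dataclass, field
from typing import List


@dataclass
class Option:
    name: str
    expected_benefit: float  # hypothetical score, higher is better
    risk: float              # hypothetical score in [0, 1]
    flags: List[str] = field(default_factory=list)


def frame_decision(options: List[Option], risk_limit: float = 0.5) -> List[Option]:
    """Rank options by benefit and flag risky ones; the human still chooses."""
    framed = []
    for o in options:
        flags = list(o.flags)
        if o.risk > risk_limit:
            flags.append(f"risk {o.risk:.2f} exceeds limit {risk_limit:.2f}")
        framed.append(Option(o.name, o.expected_benefit, o.risk, flags))
    # Sort for presentation only: every option is returned to the reviewer.
    return sorted(framed, key=lambda o: o.expected_benefit, reverse=True)


candidates = [Option("A", 1.0, 0.2), Option("B", 3.0, 0.8)]
for option in frame_decision(candidates):
    print(option.name, option.flags)
```

Note that the highest-benefit option is also the flagged one here. The tool surfaces that tension and leaves the trade-off to an accountable person.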

This evolution raises ethical questions. Who defines the objectives? Whose values are encoded? These questions will define legitimacy.

Understanding how AI models may evolve over the next decade requires recognizing this subtle but profound role shift.

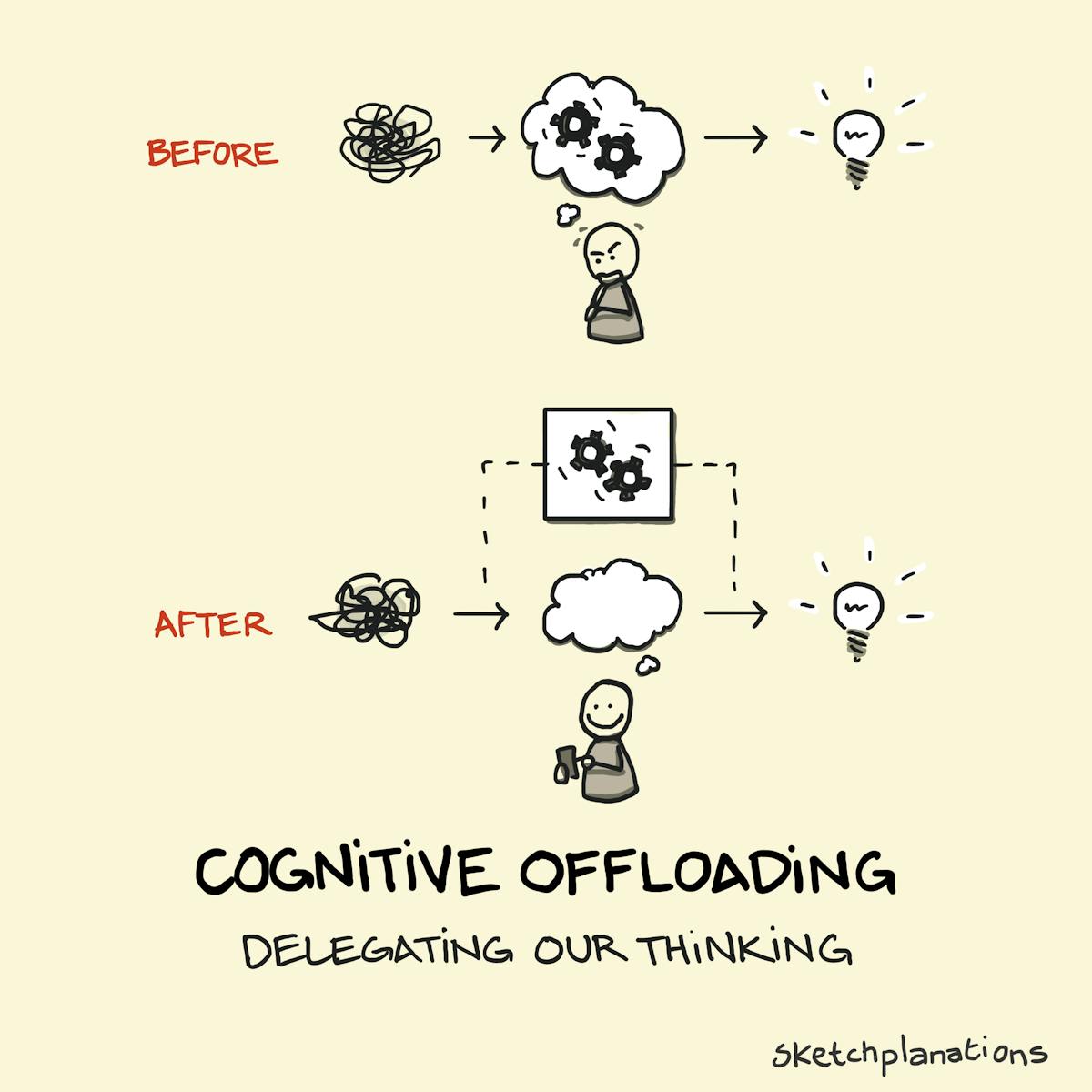

Human Skill Shifts and Cognitive Offloading

As models become more capable, human skills will shift. This is not a story of replacement but redistribution.

Routine cognitive tasks will be increasingly offloaded. Interpretation, judgment, and accountability become more central. I have seen early signals in workplaces where junior roles change fastest, not senior ones.

Education systems will adapt unevenly. Those that teach how to work with AI systems will thrive. Those that treat AI as a threat will struggle.

An economist at MIT argued in 2024 that “AI changes the marginal value of human attention.” Over the next decade, attention becomes a scarce resource shaped by machine support.

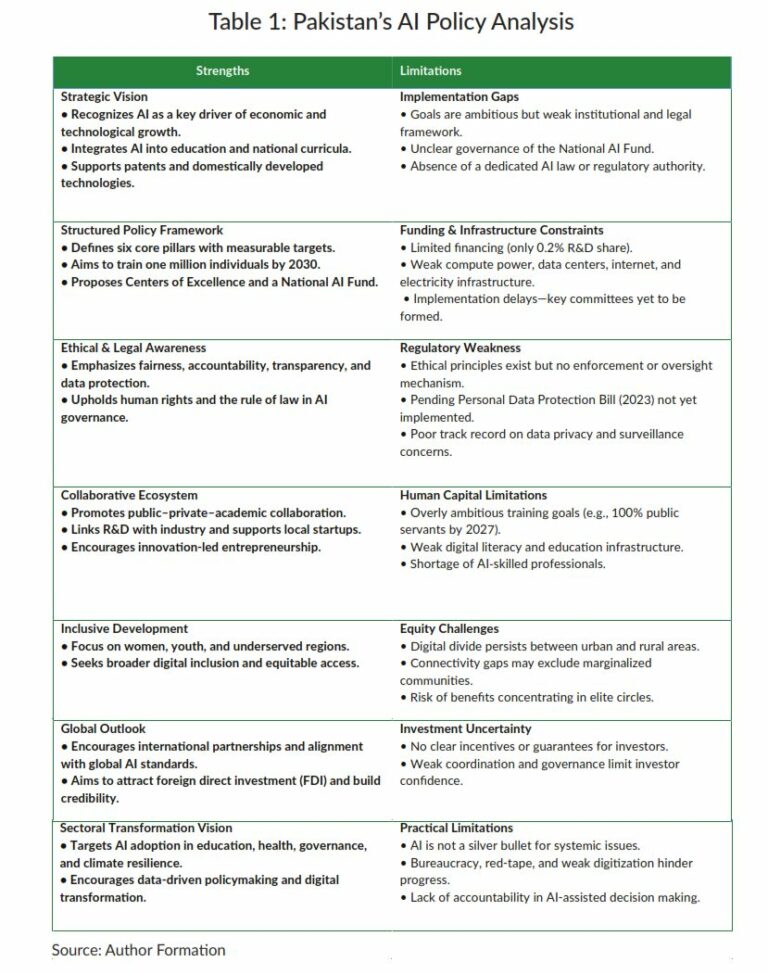

Governance Moves from Policy to Practice

Governance will move from abstract principles to operational constraints. This transition is already underway.

By 2035, most advanced AI systems will operate under explicit rules for data usage, auditability, and risk management. Compliance will shape architecture.

I have reviewed early governance frameworks that failed because they treated AI as static software. Future frameworks will need to treat AI systems as evolving, requiring ongoing oversight.
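One way compliance can shape architecture is by making every model call auditable by construction. The sketch below is an assumption-laden illustration, not a regulatory requirement: `audited`, the log format, and the `model_version` field are hypothetical names. Recording the version matters because an evolving system may answer the same input differently over time.

```python
import json
import time
from typing import Callable, List


def audited(
    model_fn: Callable[[str], str],
    log: List[str],
    model_version: str,
) -> Callable[[str], str]:
    """Wrap a model call so each input/output pair is appended to an audit log."""
    def wrapper(prompt: str) -> str:
        output = model_fn(prompt)
        record = {
            "ts": time.time(),               # when the call happened
            "model_version": model_version,  # which version produced the output
            "input": prompt,
            "output": output,
        }
        log.append(json.dumps(record))
        return output
    return wrapper


audit_log: List[str] = []
# Stand-in for a real model: upper-cases its input.
model = audited(lambda p: p.upper(), audit_log, model_version="v1")
model("approve request 17")
print(len(audit_log))
```

A production system would write to append-only storage rather than an in-memory list, and would likely need to redact personal data before logging.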

Institutions such as the OECD and the European Union are already embedding lifecycle accountability into regulation.

Multimodal Models Become the Default

The next decade will normalize multimodal AI. Text-only models will feel incomplete.

Models will ingest images, audio, sensor data, and structured information as a single context. This expands usefulness but also complexity.

From my perspective, multimodality is less about novelty and more about realism. The world is not text-based. AI systems that reflect that reality integrate more naturally.

This also increases governance challenges. More data types mean more privacy and consent considerations.

Economic Concentration and Model Access

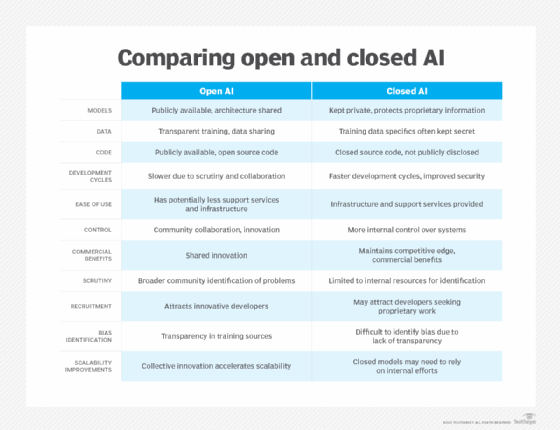

Economic structure matters. Over the next decade, AI model development may concentrate among fewer actors due to capital intensity.

At the same time, open models will persist as a counterbalance. Hybrid ecosystems will emerge where foundational models are centralized, but applications are decentralized.

I have seen small firms innovate rapidly on top of shared models, while core infrastructure remains capital-heavy.

How AI models may evolve over the next decade depends not just on science, but on market structure.

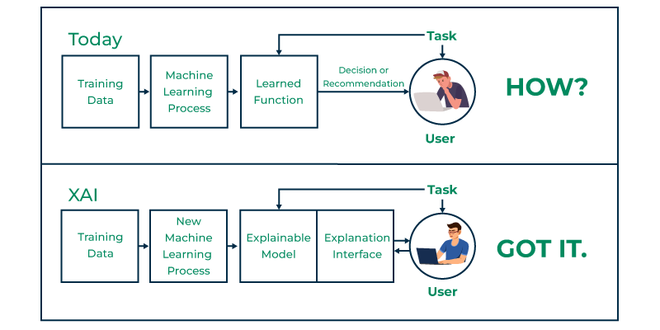

Trust, Transparency, and Social Acceptance

Public trust will shape adoption more than capability. Systems that cannot explain themselves will face resistance.

Explainability will improve, not because models become simple, but because interfaces become better at communicating uncertainty and reasoning.

In pilots I have observed, users accept AI guidance when systems clearly state their limits. Overconfidence erodes trust quickly.
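Stating limits can be as simple as an interface rule that withholds a recommendation below a confidence threshold. This is a minimal sketch under strong assumptions: the `confidence` value is taken to be a calibrated probability, and the `0.7` threshold and the message wording are arbitrary illustrative choices.

```python
from dataclasses import dataclass


@dataclass
class Guidance:
    recommendation: str
    confidence: float  # assumed to be a calibrated probability in [0, 1]


def present(g: Guidance, threshold: float = 0.7) -> str:
    """Format guidance for a user, abstaining when confidence is low."""
    if not 0.0 <= g.confidence <= 1.0:
        raise ValueError("confidence must be in [0, 1]")
    pct = round(g.confidence * 100)
    if g.confidence < threshold:
        return f"Low confidence ({pct}%): no recommendation; please review manually."
    return f"Suggested: {g.recommendation} (confidence {pct}%; verify before acting)."


print(present(Guidance("reorder stock", 0.9)))
print(present(Guidance("reorder stock", 0.4)))
```

Even the confident path carries a reminder to verify, which reflects the observation above that overconfidence is what erodes trust.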

Social acceptance is earned slowly and lost fast.

Timelines of Likely Model Evolution

| Period | Likely Shift |
|---|---|
| 2026–2028 | Efficiency-focused architectures |
| 2028–2031 | Widespread decision support adoption |
| 2031–2035 | Strong governance integration |

These are directional, not predictive. Progress will be uneven across regions and sectors.

What Will Not Change

Despite rapid progress, some limits will remain. AI models will not possess human values inherently. They will reflect the objectives and data they are given.

Human accountability will remain central. Responsibility cannot be delegated to systems.

This continuity is as important as change.

Takeaways

- AI models will become more contextual and integrated

- Scaling gives way to efficiency and orchestration

- Decision support replaces content novelty

- Human skills shift toward judgment and oversight

- Governance becomes operational, not theoretical

- Trust and transparency determine adoption

Conclusion

I approach the future of AI with cautious realism. The evolution of AI models over the next decade is not a story of sudden intelligence emergence, but of gradual integration into social systems.

The most profound changes will not come from technical benchmarks, but from how institutions adapt. Models will become quieter, more embedded, and more consequential.

Those who understand this evolution as a systems transition rather than a race for capability will be best positioned to shape outcomes that serve society rather than disrupt it.


FAQs

Will AI models become autonomous decision-makers?

They will increasingly support decisions, but human accountability remains essential.

Will model sizes continue to grow?

Growth will slow as efficiency and specialization take priority.

How will regulation affect AI evolution?

Regulation will shape architecture, deployment, and accountability.

Will open models disappear?

No. Open and closed ecosystems will coexist.

Is general AI likely within ten years?

There is no consensus. Most progress will be applied and incremental.

References

Rahman, A. (2024). AI systems and institutional change. AI & Society, 39(2), 145–158.

OECD. (2023). AI governance and accountability frameworks. https://www.oecd.org

International Energy Agency. (2025). Data centres and AI energy outlook. https://www.iea.org

DeepMind. (2024). Advances in multimodal reasoning. https://www.deepmind.com