Introduction

I have sat in boardrooms where AI was described as both a growth engine and a legal risk in the same sentence. That tension explains why the AI governance maturity model has become one of the most widely discussed governance concepts of the last two years. Leaders are no longer asking whether they should govern AI. They are asking how mature their governance actually is.

The intent behind that question is clear. Organizations want to understand where they stand today, how exposed they are to risk, and what practical steps move them toward responsible, scalable AI use. An AI governance maturity model provides that structure. It benchmarks current capabilities across strategy, people, processes, ethics, and technology, then maps a path from informal experimentation to systematic, accountable deployment.

This shift is not theoretical. Since 2023, regulators in the United States, European Union, and Asia have signaled that AI accountability will increasingly mirror financial and cybersecurity oversight. Enterprises deploying AI without governance are discovering that speed alone is no longer rewarded.

From firsthand work advising leadership teams on AI oversight, I have seen that maturity models change the conversation. Instead of debating abstract ethics, boards focus on concrete questions: Who owns model risk? How is bias monitored? What happens when systems fail?

This article explains how AI governance maturity models work, why most organizations sit in the middle stages today, and how structured progression creates both regulatory confidence and long-term competitive advantage.

Why AI Governance Has Become a Board-Level Issue

AI governance moved into the boardroom once AI systems began influencing credit decisions, hiring, healthcare triage, and content moderation at scale. These are no longer technical experiments. They are business-critical systems with legal, ethical, and reputational consequences.

The rise of generative models accelerated this shift. Unlike traditional software, AI systems adapt, learn, and sometimes behave unpredictably. That volatility demands oversight frameworks that evolve over time.

Governance scholar Cary Coglianese has argued that “AI requires dynamic governance rather than static rules.” Maturity models reflect this idea. They emphasize progression, not compliance checklists.

In my experience, boards struggle most when AI decisions are fragmented across teams. Marketing deploys one model, operations another, HR a third. Without centralized oversight, risk accumulates invisibly.

AI governance maturity models give boards a shared language. They clarify accountability and align AI initiatives with enterprise risk tolerance.

What an AI Governance Maturity Model Actually Measures

At its core, an AI governance maturity model evaluates how systematically an organization manages AI risk and value. It does not assess model accuracy. It assesses organizational readiness.

Most models examine five recurring dimensions.

- Strategy and alignment

- People and accountability

- Processes and lifecycle controls

- Ethics and risk management

- Technology and monitoring infrastructure

These dimensions appear across public and private frameworks because they reflect how organizations actually operate. Weakness in any one area limits overall maturity.
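One way to read "weakness in any one area limits overall maturity" is as a weakest-link rule: the overall level is capped by the lowest-scoring dimension. The minimal sketch below assumes each dimension has already been scored on the 1 to 5 scale of the five-stage progression described later in this article. The dimension keys and the `overall_maturity` function are illustrative and do not come from any specific framework.

```python
# Hypothetical weakest-link rule: overall maturity is capped by the weakest dimension.
DIMENSIONS = ("strategy", "people", "processes", "ethics", "technology")


def overall_maturity(scores: dict[str, int]) -> int:
    """Return the overall level (1-5) as the minimum score across all five dimensions."""
    missing = [d for d in DIMENSIONS if d not in scores]
    if missing:
        raise ValueError(f"missing scores for: {', '.join(missing)}")
    for dim in DIMENSIONS:
        if not 1 <= scores[dim] <= 5:
            raise ValueError(f"{dim} score must be between 1 and 5")
    return min(scores[d] for d in DIMENSIONS)


# A technically strong team with undefined ownership is held back by "people".
example = {"strategy": 3, "people": 1, "processes": 3, "ethics": 2, "technology": 4}
print(overall_maturity(example))  # 1
```

Under this reading, strong technology scores cannot compensate for missing ownership or escalation paths. That matches the pattern of sophisticated teams scoring low on governance maturity.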

I have seen technically sophisticated teams score low on governance maturity because leadership never defined ownership or escalation paths. Conversely, less advanced teams sometimes outperform peers because they embedded AI oversight early.

Maturity models surface these gaps clearly.

Leading AI Governance Frameworks in Use Today

Several respected institutions have published governance maturity frameworks. Each reflects a different audience and emphasis.

Popular AI Governance Frameworks

| Framework | Primary Focus | Structure | Best Fit |
|---|---|---|---|
| NIST AI RMF | Risk management | Govern, Map, Measure, Manage | Enterprises |
| Berkeley CMR Matrix | Board oversight | Reactive to Transformative | Corporate boards |
| CNA Government Model | Public sector AI | Initial to Optimized | Agencies |
| IEEE USA Assessment | Flexible benchmarking | Questionnaire-based | Mixed organizations |

Each framework answers a slightly different question. NIST focuses on operational risk. Berkeley emphasizes governance at the board and executive level. Government models stress accountability and auditability.

In practice, organizations often combine elements rather than adopting a single framework wholesale.

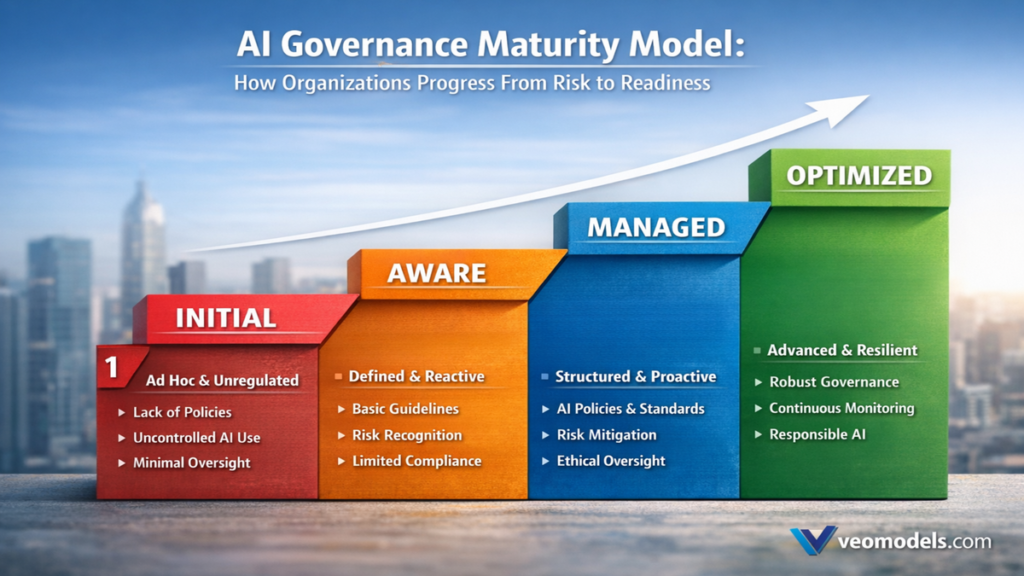

The Typical Five-Stage Maturity Progression

Across frameworks, maturity tends to follow a familiar arc. Language differs, but progression is consistent.

Level 1: Initial

AI is used opportunistically. There are no policies, no inventory of models, and no defined accountability.

Level 2: Repeatable

Basic policies emerge. Teams document models, but governance is siloed and inconsistent.

Level 3: Defined

Standardized processes exist. Risk assessments and lifecycle controls are applied consistently.

Level 4: Managed

Monitoring is automated. Metrics inform decisions. Governance is proactive rather than reactive.

Level 5: Optimized

Ethics and accountability are embedded culturally. Continuous improvement is expected.
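The cumulative logic of these stages can be sketched as a checklist. Under this reading, an organization sits at the highest level whose practices are in place, provided every lower level's practices are also in place. The practice names below are hypothetical paraphrases of the stage descriptions above, not part of any published framework. Real assessments also rely on judgment rather than a strictly binary checklist.

```python
from enum import IntEnum


class Level(IntEnum):
    INITIAL = 1
    REPEATABLE = 2
    DEFINED = 3
    MANAGED = 4
    OPTIMIZED = 5


# Hypothetical checklist: the practices each level adds, paraphrased from the stage descriptions.
REQUIREMENTS = {
    Level.REPEATABLE: ("basic_policies", "models_documented"),
    Level.DEFINED: ("standard_processes", "consistent_risk_assessments"),
    Level.MANAGED: ("automated_monitoring", "metric_driven_decisions"),
    Level.OPTIMIZED: ("ethics_embedded_culturally", "continuous_improvement"),
}


def assess_level(practices: set[str]) -> Level:
    """Return the highest level whose requirements, and those of every lower level, are met."""
    level = Level.INITIAL
    for candidate in sorted(REQUIREMENTS):
        if all(p in practices for p in REQUIREMENTS[candidate]):
            level = candidate
        else:
            break  # levels are cumulative: the first unmet level stops progression
    return level


# Policies and documentation exist, but risk assessments are not applied consistently.
observed = {"basic_policies", "models_documented", "standard_processes", "automated_monitoring"}
print(assess_level(observed).name)  # REPEATABLE
```

In the example, automated monitoring is present but does not lift the result. A Level 3 gap still holds the organization at Level 2, which is the fragile middle ground described below.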

From my advisory work, most organizations in 2026 sit between Levels 2 and 3. Few have reached Level 4 (Managed), let alone Level 5 (Optimized).

Why Most Organizations Stall at Level Two

Level 2 feels comfortable. Policies exist. Documentation exists. Leadership believes governance is “handled.” In reality, though, the controls are fragile.

The barrier is usually organizational, not technical. Governance requires cross-functional collaboration among legal, compliance, IT, and business leaders, and that coordination is difficult.

AI ethicist Timnit Gebru has noted that “governance fails when responsibility is diffused.” Maturity models expose that diffusion.

I have observed that organizations move past Level 2 only after a trigger event. That may be regulatory scrutiny, a model failure, or investor pressure. Proactive progression remains rare but is increasing.

How Board-Level Maturity Is Assessed

The Berkeley AI Governance Maturity Matrix focuses specifically on board oversight. It scores organizations across five dimensions on a simple three-point scale.

Sample Board-Level Assessment

| Dimension | Reactive (1) | Proactive (2) | Transformative (3) |
|---|---|---|---|
| Strategy | No AI vision | Basic roadmap | AI drives core value |
| People | No expertise | External advisors | Internal AI center |
| Processes | Ad hoc reviews | Standard audits | Real-time oversight |
| Ethics | No policy | Guidelines exist | Ethics board active |
| Culture | AI siloed | Cross-functional | AI-first mindset |

Scores are averaged to determine overall maturity. This approach resonates with boards because it mirrors familiar governance assessments used in cybersecurity and finance.
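The averaging step can be sketched in a few lines. The dimensions and labels come from the board-level table above. The article does not say how an average such as 1.6 maps back to a label, so the round-half-up banding rule here is an assumption, as are the example scores.

```python
from statistics import mean

# Dimensions and labels from the board-level assessment table.
DIMENSIONS = ("strategy", "people", "processes", "ethics", "culture")
LABELS = {1: "Reactive", 2: "Proactive", 3: "Transformative"}


def board_maturity(scores: dict[str, int]) -> tuple[float, str]:
    """Average the five dimension scores and map the result to a label."""
    for dim in DIMENSIONS:
        if scores.get(dim) not in LABELS:
            raise ValueError(f"{dim} must be scored 1, 2, or 3")
    average = mean(scores[d] for d in DIMENSIONS)
    # Assumed banding rule: round half up to the nearest whole point on the scale.
    band = int(average + 0.5)
    return average, LABELS[band]


example = {"strategy": 2, "people": 1, "processes": 2, "ethics": 2, "culture": 1}
average, label = board_maturity(example)
print(f"{average:.1f} {label}")  # 1.6 Proactive
```

Averaging lets a strong dimension offset a weak one. It is therefore worth reporting the per-dimension scores alongside the average, since a weakness in any single area can still limit overall maturity.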

Running a Practical Maturity Assessment

A maturity assessment does not require months of consulting. An effective initial assessment can often be completed in under an hour.

The process typically includes:

- Gathering key stakeholders

- Scoring each dimension honestly

- Identifying the largest gaps

- Defining a short term roadmap
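The scoring and gap-finding steps lend themselves to a simple tally. This sketch makes several assumptions:

- Each stakeholder scores every dimension on the 1 to 5 scale.
- The median is taken as the consensus score.
- Disagreement is measured as the spread between the highest and lowest score.
- Dimensions are ranked by their distance from a Level 3 target.

The roles, scores, target, and disagreement threshold are all hypothetical.

```python
from statistics import median

# Hypothetical workshop input: each stakeholder scores each dimension on the 1-5 scale.
workshop_scores = {
    "strategy":   {"CEO": 3, "CIO": 3, "Legal": 2},
    "people":     {"CEO": 3, "CIO": 2, "Legal": 1},
    "processes":  {"CEO": 2, "CIO": 2, "Legal": 2},
    "ethics":     {"CEO": 3, "CIO": 1, "Legal": 1},
    "technology": {"CEO": 2, "CIO": 3, "Legal": 3},
}
TARGET = 3  # assumed near-term goal: Level 3 (Defined) on every dimension
DISAGREEMENT_THRESHOLD = 2  # assumed: a spread this wide warrants discussion


def rank_gaps(scores: dict[str, dict[str, int]], target: int) -> list[tuple[str, float, float, int]]:
    """Return (dimension, consensus, gap, spread) tuples, largest gap first."""
    rows = []
    for dim, by_person in scores.items():
        values = list(by_person.values())
        consensus = median(values)
        spread = max(values) - min(values)
        rows.append((dim, consensus, max(target - consensus, 0), spread))
    # Largest gaps first; ties broken by the widest disagreement.
    return sorted(rows, key=lambda r: (r[2], r[3]), reverse=True)


for dim, consensus, gap, spread in rank_gaps(workshop_scores, TARGET):
    flag = "  <- discuss" if spread >= DISAGREEMENT_THRESHOLD else ""
    print(f"{dim:<11} consensus={consensus} gap={gap} spread={spread}{flag}")
```

In this example, ethics ranks first. Its median score is 1 and its scores range from 1 to 3, so it is both the largest gap and a point of disagreement to resolve before committing to a roadmap.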

In workshops I have facilitated, the most valuable outcome is alignment. Leaders often disagree on scores at first, and that disagreement surfaces hidden assumptions.

Once gaps are visible, action planning becomes easier. Moving from Level 2 to Level 3 is usually achievable within 12 to 18 months with focused effort.

Why Regulators and Investors Care About Maturity

Regulators increasingly expect evidence of governance, not just assurances. Maturity models provide that evidence.

Under the EU AI Act, organizations that provide or deploy high-risk AI systems must demonstrate risk management processes, transparency, and accountability, and emerging US guidance points in the same direction. A documented maturity framework supports compliance.

Investors also pay attention. Governance maturity signals operational discipline. In the due diligence conversations I have observed, AI governance now appears alongside cybersecurity and data privacy.

As legal scholar Ryan Calo has written, “AI governance will become a proxy for organizational trustworthiness.” Maturity models formalize that trust.

The Strategic Upside of Higher Maturity

Beyond compliance, higher maturity enables faster innovation. When governance is embedded, teams spend less time debating whether deployment is allowed and more time improving systems.

Level 4 organizations can deploy AI with confidence because monitoring and escalation paths already exist. Failures are detected early and addressed systematically.

From experience, these organizations outperform peers not by avoiding risk, but by managing it intelligently.

Takeaways

- AI governance maturity models assess organizational readiness, not model performance

- Most organizations operate at Level 2 or early Level 3 today

- Board-level oversight is a critical maturity indicator

- Progression requires cultural and organizational change

- Regulators increasingly expect documented governance

- Higher maturity enables safer, faster AI deployment

Conclusion

The AI governance maturity model has become essential because AI itself has become essential. As systems grow more autonomous and influential, informal oversight no longer scales.

Maturity models offer a practical way to move from reactive controls to embedded governance. They replace vague commitments with measurable progress. They also shift governance from fear-based restraint to structured enablement.

From firsthand work with organizations at different stages, the pattern is clear. Those that invest early in governance maturity adapt faster, face fewer surprises, and earn greater trust from regulators, investors, and users.

AI will continue to evolve. Governance must evolve with it. Maturity models provide the roadmap.


FAQs

What is an AI governance maturity model?

It is a framework used to assess how well an organization governs AI across strategy, ethics, processes, and risk management.

Why do organizations need governance maturity assessments?

They help identify gaps, reduce risk, and demonstrate accountability to regulators and stakeholders.

How long does it take to improve maturity?

Most organizations can move one maturity level within 12 to 18 months with focused effort.

Are maturity models required by law?

Not directly, but they support compliance with emerging AI regulations and audits.

Which framework should an organization choose?

The best framework depends on sector and goals. Many organizations combine elements from multiple models.

References

Coglianese, C. (2023). Regulating Artificial Intelligence. University of Pennsylvania Law Review.

NIST. (2024). AI Risk Management Framework. https://www.nist.gov

IEEE. (2023). AI Governance and Ethics Initiatives. https://www.ieee.org

Calo, R. (2022). Artificial Intelligence Policy. Stanford Law Review.