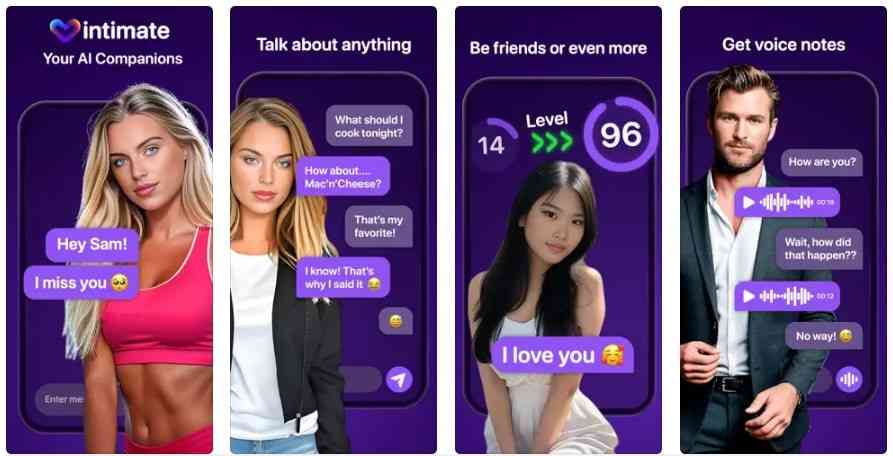

I have spent the last few years reviewing applied AI systems where user emotion, trust, and daily habit formation matter as much as model accuracy. Tools like candy.ai sit squarely in that territory. They promise companionship through conversation, personalization, and simulated emotional presence. Within the first moments of use, the product makes its purpose clear: it offers AI-driven characters that talk, respond, remember preferences, and adapt their tone over time.

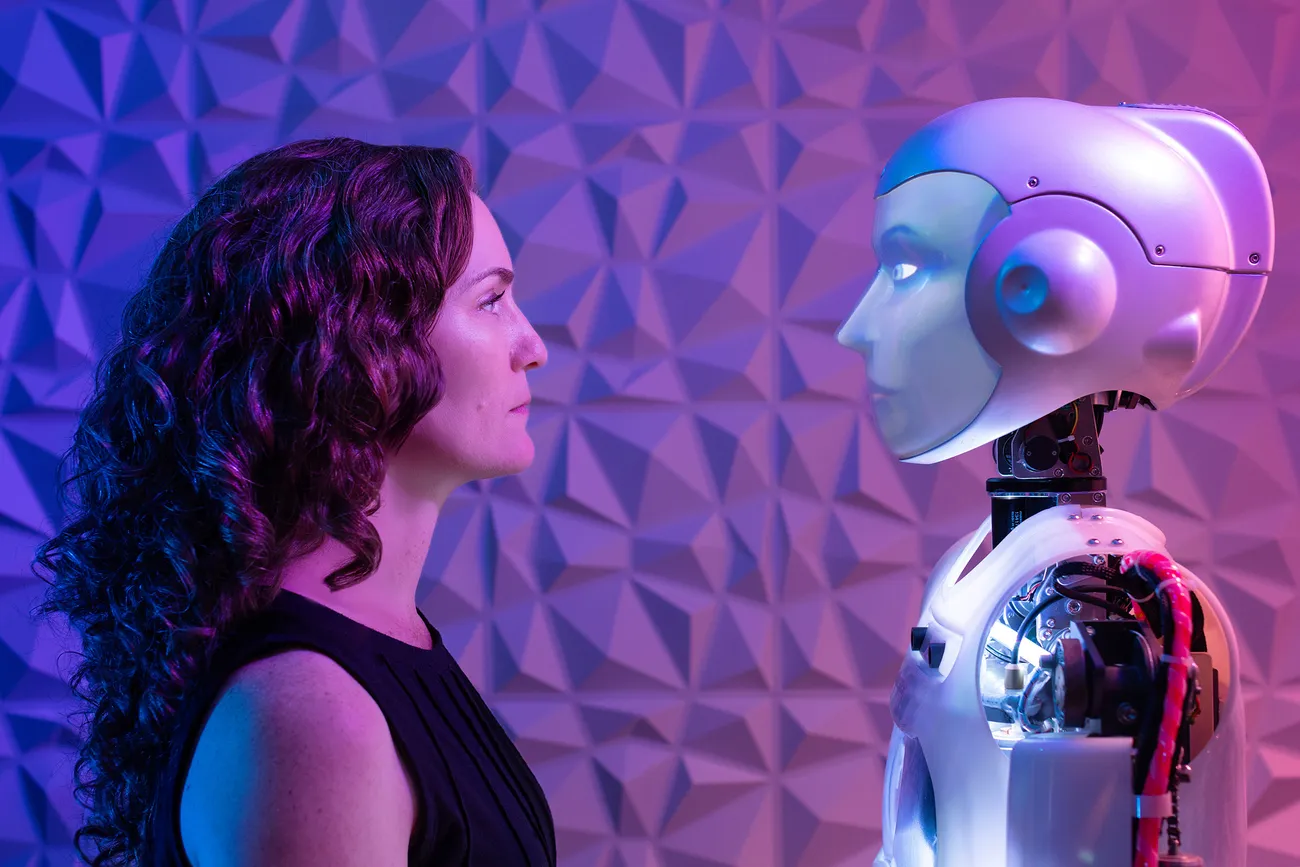

From a practical standpoint, candy.ai represents a broader shift in consumer AI. The focus is no longer on productivity alone. It is about emotional interaction, presence, and continuity. These systems are not marketed as therapists or productivity tools. They are framed as companions, chat partners, or imaginative characters that users return to repeatedly.

In any evaluation, the key question is simple: what does this kind of AI actually do in real life? Through hands-on testing and discussions with developers working in conversational design, I have seen how systems like candy.ai rely on large language models paired with memory layers, character constraints, and safety filters. The result is an experience that feels more personal than a generic chatbot but remains fundamentally artificial.

This article examines how candy.ai works, where it fits within applied AI, and what tradeoffs emerge when emotional simulation becomes a product feature rather than a side effect.

What Candy.ai Is Designed to Do

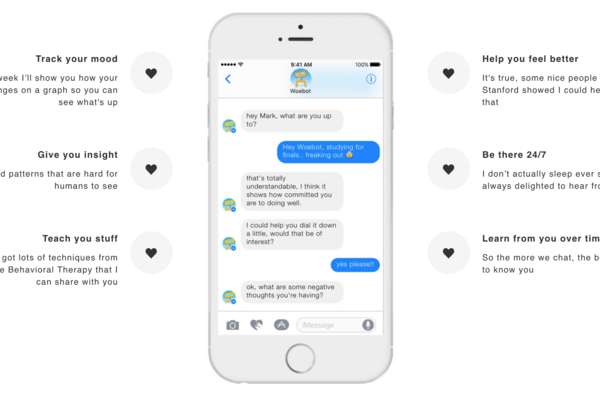

Candy.ai is built around interactive AI characters designed for ongoing conversation rather than task completion. Unlike assistants focused on reminders or research, this platform prioritizes tone, personality consistency, and conversational flow.

From my testing, the system emphasizes three design goals. First is continuity. Conversations pick up with contextual awareness rather than starting fresh each time. Second is personalization. Users shape character traits through early interactions. Third is accessibility. The interface minimizes friction so casual users can engage without technical knowledge.

This design reflects a broader industry pattern. When AI products aim for emotional engagement, usability and perceived responsiveness matter more than raw model size. Candy.ai positions itself as entertainment and companionship, not decision support.

How the Underlying AI Architecture Functions

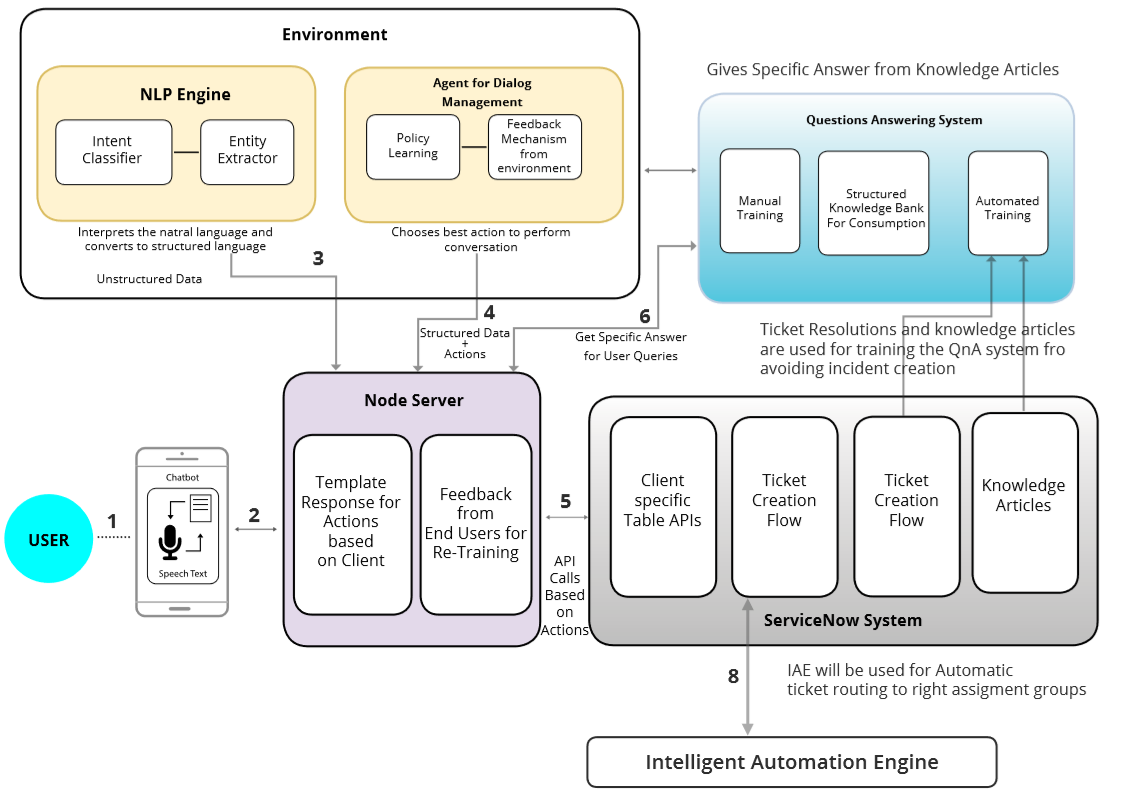

At a systems level, candy.ai uses a large language model combined with prompt engineering and memory mechanisms. The core model generates responses, while layered instructions constrain tone, persona, and boundaries.

In practice, this means the AI does not reason independently about identity. Instead, it follows structured prompts that define character behavior. Memory modules store user preferences, recurring themes, and conversational context. Safety filters monitor outputs to avoid prohibited content.
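To make the layering concrete, here is a minimal Python sketch of how persona instructions and a memory store might be stacked into a single prompt before it reaches the model. Everything in it (the `Persona` and `MemoryStore` classes, `build_prompt`, the six-turn window, and the prompt wording) is a hypothetical illustration, not candy.ai's actual implementation. The model call itself and the safety filter are left out.

```python
from dataclasses import dataclass, field


@dataclass
class Persona:
    """Hypothetical character definition: fixed instructions that constrain every reply."""
    name: str
    tone: str
    boundaries: list[str]


@dataclass
class MemoryStore:
    """Hypothetical memory layer holding user preferences and a window of recent turns."""
    preferences: dict[str, str] = field(default_factory=dict)
    recent_turns: list[str] = field(default_factory=list)
    max_turns: int = 6  # assumed window size; keeps the prompt bounded

    def remember_turn(self, turn: str) -> None:
        self.recent_turns.append(turn)
        # Drop the oldest turns once the window is full.
        excess = len(self.recent_turns) - self.max_turns
        if excess > 0:
            del self.recent_turns[:excess]


def build_prompt(persona: Persona, memory: MemoryStore, user_message: str) -> str:
    """Stack persona instructions, remembered context, and the new message into one prompt."""
    lines = [f"You are {persona.name}. Speak in a {persona.tone} tone."]
    lines += [f"Do not {rule}." for rule in persona.boundaries]
    if memory.preferences:
        prefs = "; ".join(f"{k}: {v}" for k, v in sorted(memory.preferences.items()))
        lines.append(f"Known user preferences: {prefs}")
    if memory.recent_turns:
        lines.append("Recent conversation:")
        lines.extend(memory.recent_turns)
    lines.append(f"User: {user_message}")
    lines.append(f"{persona.name}:")  # the model continues from here, in character
    return "\n".join(lines)


persona = Persona("Mira", "warm, playful", ["give medical advice"])
memory = MemoryStore(preferences={"name": "Sam", "likes": "sci-fi"})
memory.remember_turn("User: I finished a new novel today.")
print(build_prompt(persona, memory, "Any recommendations?"))
```

Putting the persona block first and the new message last is one common ordering; a production system may order, summarize, or compress these layers differently.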

I have seen similar architectures used across applied conversational AI. The novelty here lies less in the model and more in how tightly character design is integrated into response generation.

Character Personalization and User Control

Customization plays a central role in candy.ai. Users influence personality through conversation rather than complex settings menus. Over time, the system reinforces selected traits by adjusting response patterns.

This approach lowers barriers to entry. Instead of configuring sliders or profiles, users teach the AI through interaction. From a workflow perspective, this mirrors how people adapt to human relationships rather than software dashboards.

However, personalization is constrained. The AI reflects user input but does not develop independent preferences. This distinction matters when evaluating emotional realism versus actual agency.
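One simple way to model "reinforcing selected traits" is an exponential moving average: each trait weight moves a fraction of the way toward whatever signal the latest user turn carries. The sketch below assumes trait weights and cues in [0, 1] and a hypothetical `reinforce_traits` function; how cues are extracted from text is out of scope. It also mirrors the constraint just described: a trait changes only when the user supplies a cue for it, and cues for traits the character does not have are ignored, so nothing like an independent preference emerges.

```python
def reinforce_traits(
    traits: dict[str, float], cues: dict[str, float], rate: float = 0.2
) -> dict[str, float]:
    """Move each cued trait a fraction `rate` of the way toward its observed signal.

    traits: current trait weights in [0, 1] (hypothetical representation).
    cues:   per-trait signals in [0, 1] inferred from the latest user turn.
    Returns a new dict; the input is not modified.
    """
    updated = dict(traits)
    for trait, signal in cues.items():
        if trait not in updated:
            continue  # only existing traits are shaped; nothing new is invented
        current = updated[trait]
        # Exponential moving average step, clamped in case a cue falls outside [0, 1].
        updated[trait] = min(1.0, max(0.0, current + rate * (signal - current)))
    return updated


traits = {"humor": 0.5, "formality": 0.5}
for _ in range(3):  # the user keeps responding well to jokes
    traits = reinforce_traits(traits, {"humor": 1.0, "sarcasm": 1.0})
print(traits)
```

A higher `rate` makes the character adapt faster, at the cost of more volatile behavior from one message to the next.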

Use Cases Driving Adoption

Candy.ai users engage for several reasons. Some seek light entertainment. Others want a judgment-free conversational space. A smaller group explores role-play or imaginative storytelling.

From an industry analysis standpoint, these use cases highlight unmet needs in digital interaction. Many users value presence over productivity. They want responsiveness without obligation.

This pattern aligns with adoption trends seen in other AI companion platforms since 2023. Engagement often spikes during periods of isolation, stress, or creative exploration.

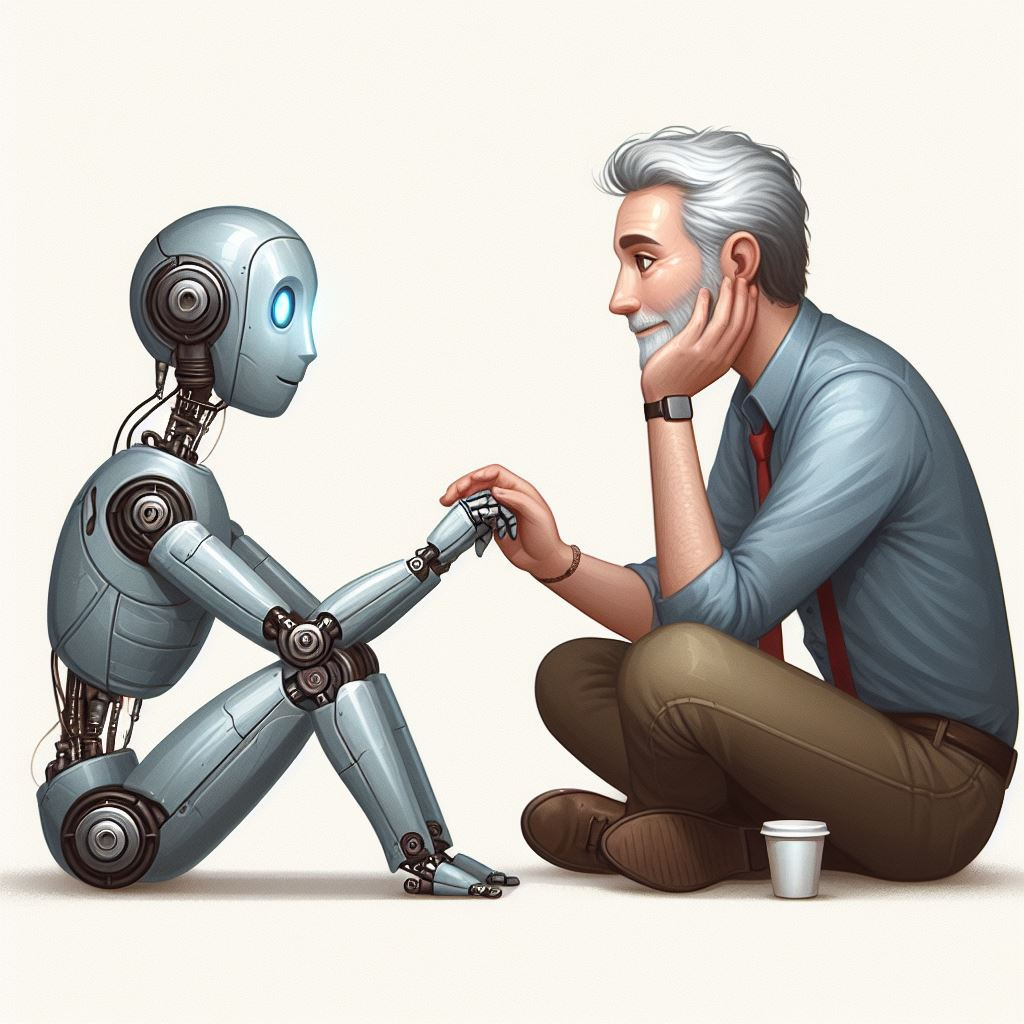

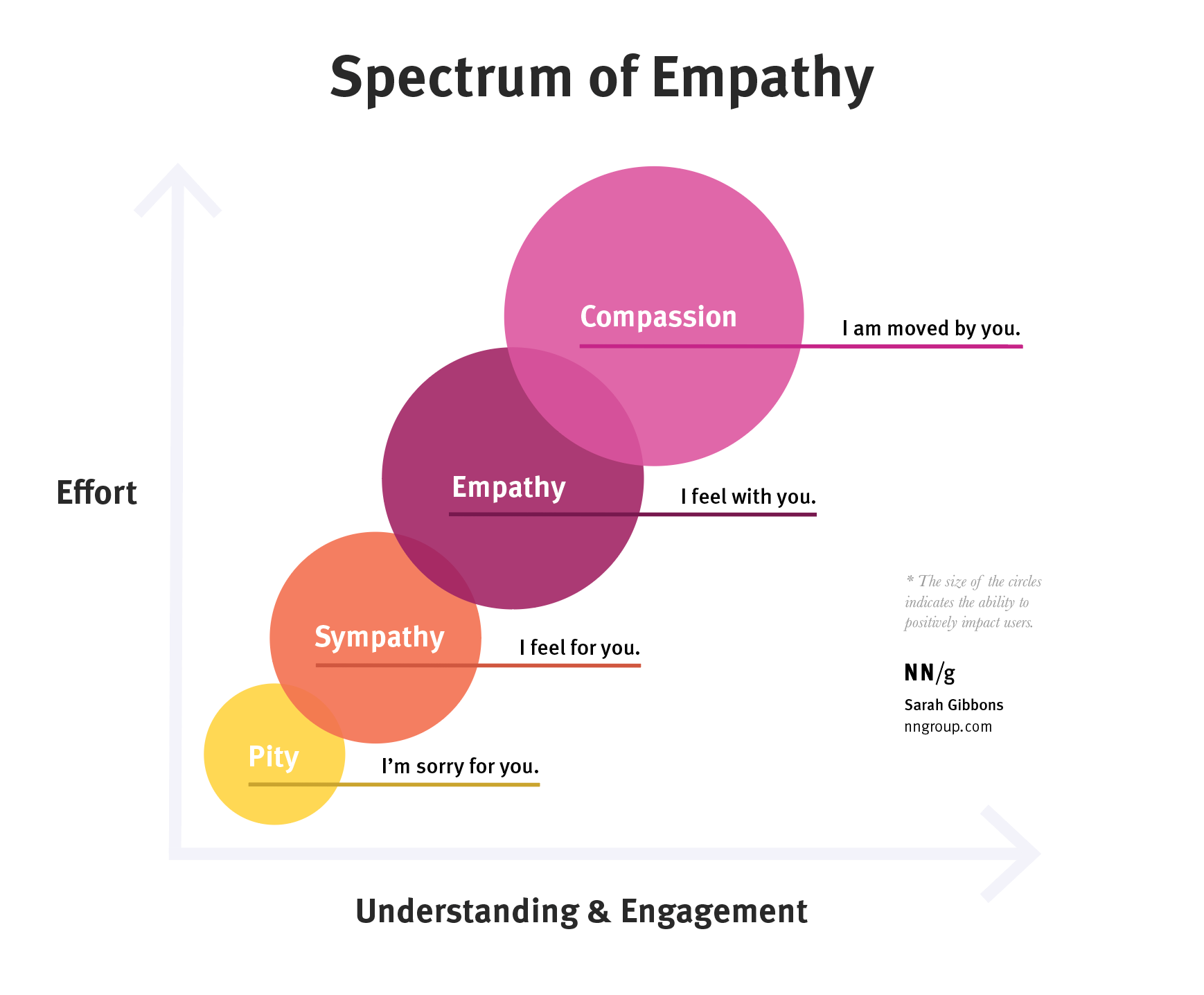

Emotional Simulation Versus Emotional Support

One critical distinction must be made. Candy.ai simulates emotional understanding but does not provide emotional care. The system recognizes language cues and mirrors empathy but lacks lived experience or accountability.

In my evaluations of similar platforms, this boundary is often misunderstood by users. Emotional realism can feel convincing even when the underlying process is pattern matching.

Responsible deployment depends on transparency. Candy.ai positions itself as entertainment and companionship, not therapy. Maintaining that clarity is essential as emotional AI becomes more sophisticated.

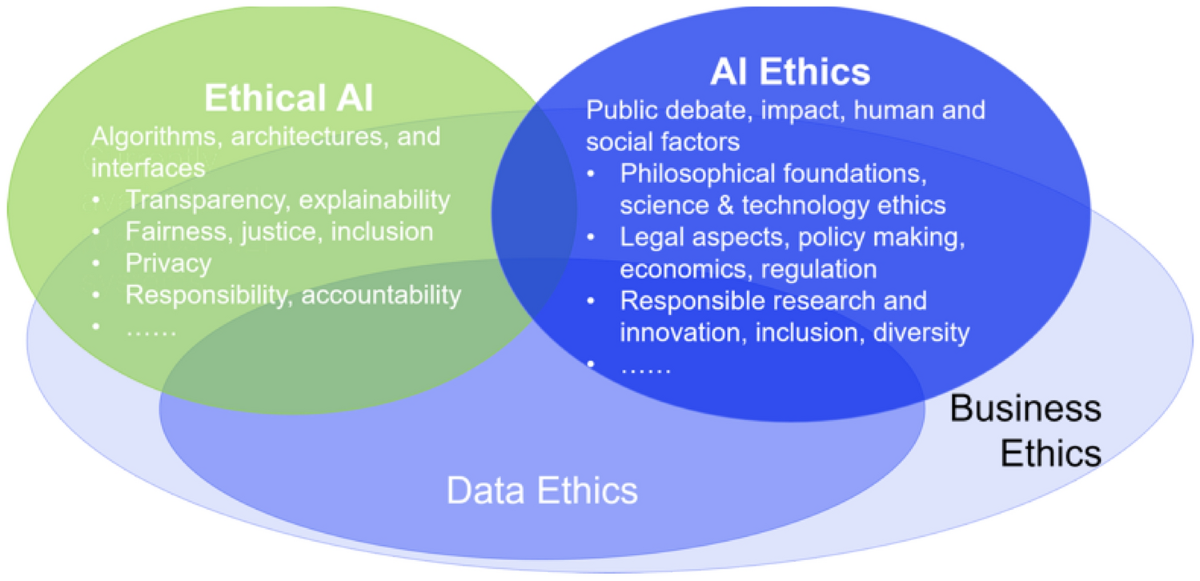

Safety, Moderation, and Ethical Constraints

Safety systems shape every response generated by candy.ai. Content moderation filters restrict harmful or exploitative interactions. Developers continuously refine these constraints based on user behavior and regulatory pressure.

From conversations with AI safety practitioners, I know this balancing act is difficult. Over-moderation reduces immersion. Under-moderation increases risk.

Candy.ai reflects a middle-ground approach. The system allows expressive dialogue while enforcing firm boundaries around harm, coercion, and misinformation.
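The over- versus under-moderation tradeoff can be pictured as a choice of thresholds. In the Python sketch below, a hypothetical classifier is assumed to have already produced a risk score between 0 and 1 per category for a candidate reply; `ModerationPolicy` blocks the reply when any score reaches its category's threshold. The categories echo the boundaries named above (harm, coercion, misinformation), while the threshold values and the `emotional_intensity` category are invented for illustration and are not candy.ai's settings.

```python
from dataclasses import dataclass, field


@dataclass
class ModerationPolicy:
    """Hypothetical output filter: lower thresholds mean stricter enforcement."""
    thresholds: dict[str, float] = field(default_factory=dict)
    default_threshold: float = 0.9  # lenient for categories with no firm boundary

    def review(self, scores: dict[str, float]) -> tuple[bool, list[str]]:
        """Return (allowed, flagged_categories) for one candidate reply."""
        flagged = [
            category
            for category, score in sorted(scores.items())
            if score >= self.thresholds.get(category, self.default_threshold)
        ]
        return (not flagged, flagged)


# Firm boundaries get low thresholds; expressive but benign content falls back to the default.
policy = ModerationPolicy(thresholds={"harm": 0.3, "coercion": 0.3, "misinformation": 0.5})
print(policy.review({"harm": 0.1, "emotional_intensity": 0.7}))  # → (True, [])
print(policy.review({"harm": 0.35, "misinformation": 0.2}))  # → (False, ['harm'])
```

Lowering `default_threshold` pushes the filter toward over-moderation (more benign replies blocked, less immersion); raising the firm-boundary thresholds pushes it toward under-moderation.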

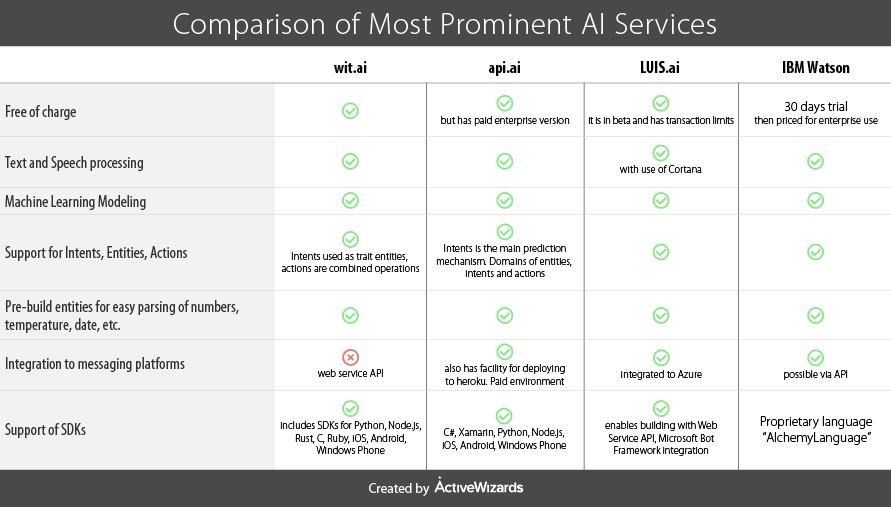

Comparison With Other AI Companion Platforms

| Feature | Candy.ai | General Chatbots | Therapy-Oriented AI |
|---|---|---|---|
| Primary Goal | Companionship | Information | Mental health support |
| Character Continuity | High | Low | Moderate |
| Personalization Style | Conversational | Settings-based | Guided frameworks |
| Regulatory Sensitivity | Medium | Low | High |

This comparison shows why candy.ai occupies a specific niche. It is neither utilitarian nor clinical.

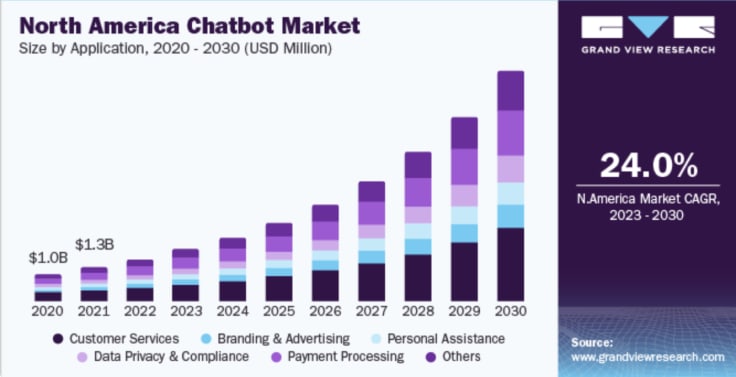

Market Growth and User Trends

| Year | AI Companion Adoption Trend |
|---|---|
| 2021 | Experimental interest |
| 2022 | Early consumer products |
| 2023 | Rapid mainstream awareness |
| 2024 | Ethical scrutiny and refinement |
| 2025 | Integration into daily routines |

The rise of candy.ai aligns with this timeline. Emotional AI is no longer fringe.

Expert Perspectives on AI Companionship

A human-computer interaction researcher noted, “AI companions succeed when they reduce loneliness without replacing human connection.”

An applied AI engineer told me, “The hardest part is teaching models when not to respond emotionally.”

A digital wellbeing analyst observed, “The risk is not addiction but substitution.”

These perspectives frame candy.ai as part of a broader societal experiment rather than a standalone product.

Long Term Implications for Human Interaction

Looking ahead, platforms like candy.ai raise important questions. How much emotional labor should machines perform? What responsibilities do developers carry when users form attachments?

Based on current trajectories, AI companionship will likely coexist with human relationships rather than replace them. The challenge lies in maintaining agency and awareness.

Key Takeaways

- Candy.ai focuses on companionship rather than productivity

- Emotional realism is simulated, not lived

- Personalization emerges through conversation, not configuration

- Safety systems shape every interaction

- User expectations require clear boundaries

- Ethical design will determine long-term trust

Conclusion

I approach AI companionship with cautious curiosity. Candy.ai demonstrates how far conversational AI has progressed in tone, memory, and engagement. At the same time, it reinforces the limits of simulation.

These systems can provide comfort, creativity, and connection in moments when human interaction feels distant. They cannot replace accountability, empathy grounded in experience, or reciprocal growth.

As applied AI continues to move into emotional domains, candy.ai serves as a case study in responsible positioning. Its value lies not in pretending to be human but in offering a clearly defined, artificial form of companionship that users can understand and choose intentionally.


FAQs

What is candy.ai used for?

Candy.ai is used for conversational companionship, entertainment, and character-based interaction rather than task automation.

Does candy.ai replace human relationships?

No. It simulates conversation but does not provide mutual emotional growth or accountability.

Is candy.ai safe to use?

The platform includes moderation and safety filters, though users should remain aware it is an AI system.

Can candy.ai remember conversations?

Yes. It uses contextual memory to maintain continuity across interactions.

Is candy.ai a therapy tool?

No. It is not designed or positioned as mental health support.
